Process Control and Continuous Improvement

Description

| This is some of the most advanced material in this document, but critical to understanding the foundations of Agile methods. |

Once processes are measured, the natural desire is to use the measurements to improve them. Process management, like project management, is a discipline unto itself and one of the most powerful tools in your toolbox. The practitioner eventually starts to realize there is a process by which process itself is managed – the process of continuous improvement. You remain concerned that work continues to flow well, that you do not take on too much work-in-process, and that people are not overloaded and multi-tasking.

In this Competency Category, we take a deeper look at the concept of process and how processes are managed and controlled. In particular, we will explore the concept of continuous (or continual) improvement and its rich history and complex relationship to Agile.

You are now at a stage in your company’s evolution, or your career, where an understanding of continuous improvement is helpful. Without this, you will increasingly find you do not understand the language and motivations of leaders in your organization, especially those with business degrees or background.

The scope of the word “process” is immense. Examples include:

- The end-to-end flow of chemicals through a refinery
- The set of activities across a manufacturing assembly line, resulting in a product for sale
- The steps expected of a customer service representative in handling an inquiry
- The steps followed in troubleshooting a software-based system
- The general steps followed in creating and executing a project
- The overall flow of work in software development, from idea to operation

This breadth of usage requires us to be specific in any discussion of the word “process”. In particular, we need to be careful in understanding the concepts of efficiency, variation, and effectiveness. These concepts lie at the heart of understanding process control and improvement and how to correctly apply it in the digital economy.

Companies institute processes because it has been long understood that repetitive activities can be optimized when they are better understood, and if they are optimized, they are more likely to be economical and even profitable. We have emphasized throughout this document that the process by which complex systems are created is not repetitive. Such creation is a process of product development, not production. And yet, the entire digital organization covers a broad spectrum of process possibilities, from the repetitive to the unique. You need to be able to identify what kind of process you are dealing with, and to choose the right techniques to manage it. (For example, an employee provisioning process flow could be simple and prescriptive. Measuring its efficiency and variability would be possible, and perhaps useful.)

There are many aspects of the movement known as “continuous improvement” that we will not cover in this brief section. Some of them (systems thinking, culture, and others) are covered elsewhere in this document. This document is based in part on Lean and Agile premises, and continuous improvement is one of the major influences on Lean and Agile, so in some ways, we come full circle. Here, we are focusing on continuous improvement in the context of processes and process improvement. We will therefore scope this to a few concerns: efficiency, variation, effectiveness, and process control.

History of Continuous Improvement

The history of continuous improvement is intertwined with the history of 20th-century business itself. Before the Industrial Revolution, goods and services were produced primarily by local farmers, artisans, and merchants. Techniques were jealously guarded, not shared. A given blacksmith might have two or three workers, who might all forge a pan or a sword in a different way. The term “productivity” itself was unknown.

Then the Industrial Revolution happened.

As steam and electric power increased the productivity of industry, requiring greater sums of capital to fund, a search for improvements began. Blacksmith shops (and other craft producers such as grain millers and weavers) began to consolidate into larger organizations, and technology became more complex and dangerous. It started to become clear that allowing each worker to perform the work as they preferred was not feasible.

Enter the scientific method. Thinkers such as Frederick Taylor and Frank and Lillian Gilbreth (of Cheaper by the Dozen fame) started applying careful techniques of measurement and comparison, in search of the “one best way” to dig ditches, forge implements, or assemble vehicles. Organizations became much more specialized and hierarchical. An entire profession of industrial engineering was established, along with the formal study of business management itself.

Frederick Taylor and Efficiency

Frederick Taylor (1856-1915) was a mechanical engineer and one of the first industrial engineers. In 1911, he wrote The Principles of Scientific Management [Taylor 1911]. One of Taylor’s primary contributions to management thinking was a systematic approach to efficiency. To understand this, let us consider some fundamentals.

Human beings engage in repetitive activities. These activities consume inputs and produce outputs. It is often possible to compare the outputs against the inputs, numerically, and understand how “productive” the process is. For example, suppose you have two factories producing identical kitchen utensils (such as pizza cutters). If one factory can produce 50,000 pizza cutters for $2,000, while the other requires $5,000, the first factory is more productive.

Assume for a moment that workers earn the same wages at both factories, and that both factories pay the same prices for raw materials. There is possibly a “process” problem. The first factory is more efficient than the second; it produces the same output from fewer inputs or, equivalently, more output per dollar. Why?

There are many possible reasons. Perhaps the second factory is poorly laid out, and the work-in-process must be moved too many times in order for workers to perform their tasks. Perhaps the workers are using tools that require more manual steps. Understanding the differences between the two factories, and recommending the “best way”, is what Taylor pioneered, and what industrial engineers do to this day.
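The pizza cutter comparison can be expressed as a simple output-to-input ratio. The following is a minimal sketch using the figures from the example; the function name `productivity` and the choice of "units per dollar" as the measure are illustrative assumptions, not a standard definition.

```python
def productivity(units_produced: int, cost_dollars: float) -> float:
    """Return output per unit of input (here, units per dollar)."""
    if cost_dollars <= 0:
        raise ValueError("cost_dollars must be positive")
    return units_produced / cost_dollars


# Figures from the pizza cutter example in the text.
factory_a = productivity(50_000, 2_000)
factory_b = productivity(50_000, 5_000)

# How many times more output per dollar the first factory achieves.
ratio = factory_a / factory_b

print(f"Factory A: {factory_a:.1f} units per dollar")
print(f"Factory B: {factory_b:.1f} units per dollar")
print(f"A is {ratio:.1f}x as productive as B")
```

A ratio like this only identifies that a gap exists. It says nothing about the cause, which is where the analysis of layout, tooling, and manual steps comes in.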

As Peter Drucker, one of the most influential management thinkers, says of Frederick Taylor:

The history of industrial engineering is often controversial, however. Hard-won skills were analyzed and stripped from traditional craftspeople by industrial engineers with clipboards, who now would determine the “one best way”. Workers were increasingly treated as disposable. Work was reduced to its smallest components of a repeatable movement, to be performed on the assembly line, hour after hour, day after day until the industrial engineers developed a new assembly line. Taylor was known for his contempt for the workers, and his methods were used to increase work burdens sometimes to inhuman levels. Finally, some kinds of work simply cannot be broken into constituent tasks. High-performing, collaborative, problem-solving teams do not use Taylorist principles, in general. Eventually, the term “Taylorism” was coined, and today is often used more as a criticism than a compliment.

W. Edwards Deming and Variation

The quest for efficiency leads to the long-standing management interest in variability and variation. What do we mean by this?

If you expect a process to take five days, what do you make of occurrences when it takes seven days? Four days? If you expect a manufacturing process to yield 98% usable product, what do you do when it falls to 97%? 92%? In highly repeatable manufacturing processes, statistical techniques can be applied. Analyzing such “variation” has been a part of management for decades, and is an important part of disciplines such as Six Sigma. This is why Six Sigma is of such interest to manufacturing firms.

William Edwards Deming (1900-1993) is noted for (among many other things) his understanding of variation and organizational responses to it. Understanding variation is one of the major parts of his System of Profound Knowledge. He emphasizes the need to distinguish special causes from common causes of variation; special causes are those requiring management attention.

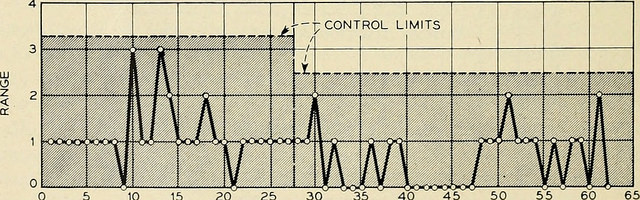

Deming, in particular, was an advocate of the control chart, a technique developed by Walter A. Shewhart, to understand whether a process was within statistical control; see Process Control Chart.
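As a rough illustration of the control chart idea, the sketch below derives limits from a baseline period and flags later observations that fall outside them. It is a simplification under stated assumptions: the limits are the baseline mean plus or minus three sample standard deviations, whereas Shewhart charts in practice usually estimate spread from subgroup ranges or moving ranges. The yield figures and function names are hypothetical.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Return (lower, center, upper) as the mean -/+ 3 sample standard deviations."""
    center = mean(baseline)
    sigma = stdev(baseline)
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(observations, limits):
    """Return (index, value) pairs for observations outside the control limits."""
    lower, _, upper = limits
    return [(i, x) for i, x in enumerate(observations) if x < lower or x > upper]


# Hypothetical yield percentages from a period when the process ran normally.
baseline = [98.0, 97.8, 98.2, 98.1, 97.9, 98.0, 98.3, 97.7, 98.0, 98.0]
limits = control_limits(baseline)

# Hypothetical later observations, including one sharp drop in yield.
new_points = [97.9, 98.1, 92.0, 98.2]
signals = out_of_control(new_points, limits)

print(f"limits: {limits[0]:.2f} to {limits[2]:.2f}")
print(f"signals: {signals}")
```

Points inside the limits are treated as common-cause variation and left alone; points outside are candidates for a special cause worth investigating.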

However, using techniques of this nature makes certain critical assumptions about the nature of the process. Understanding variation and when to manage it requires care. These techniques were defined to understand physical processes that in general follow normal distributions.

For example, let us say you are working at a large manufacturer, in their IT organization, and you see the metric of “variance from project plan”. The idea is that your actual project time, scope, and resources should be the same, or close to, what you planned. In practice, this tends to become a discussion about time, as resources and scope are often fixed.

The assumption is that, for your project tasks, you should be able to estimate to a meaningful degree of accuracy. Your estimates are equally likely to be too low, or too high. Furthermore, it should be somehow possible to improve the accuracy of your estimates. Your annual review depends on this, in fact.

The problem is that none of these assumptions holds. Despite heroic efforts, you cannot improve your estimation. In process control jargon, there are too many causes of variation for “best practices” to emerge. Project tasks remain unpredictable, and the variability does not follow a normal distribution. Very few tasks get finished earlier than you estimated, and there is a long tail to the right, of tasks that take two times, three times, or ten times longer than estimated.
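The long right tail can be made concrete with a small calculation. The ratios below are invented for illustration, not measured data; each is a task's actual duration divided by its estimate. If estimation errors were symmetric, early and late tasks would be roughly balanced and the mean would sit close to the median.

```python
from statistics import mean, median

# Hypothetical ratios of actual duration to estimated duration for ten tasks.
# A ratio of 1.0 means the task finished exactly on estimate.
ratios = [0.9, 1.0, 1.0, 1.1, 1.2, 1.3, 1.5, 2.0, 3.0, 10.0]

early = sum(1 for r in ratios if r < 1.0)  # tasks finished ahead of estimate
late = sum(1 for r in ratios if r > 1.0)   # tasks that overran the estimate
typical = median(ratios)
average = mean(ratios)

print(f"early: {early}, late: {late}")
print(f"median ratio: {typical:.2f}, mean ratio: {average:.2f}")
```

In this made-up sample, a single task that ran ten times over pulls the mean far above the median. That skew is the pattern that undermines techniques built on symmetric, normally distributed variation.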

In general, applying statistical process control to variable, creative product development processes is inappropriate. For software development, Stephen Kan states: “Many assumptions that underlie control charts are not being met in software data. Perhaps the most critical one is that data variation is from homogeneous sources of variation.” That is, control charts assume that the causes of variation are knowable and can be addressed. This is in general not true of development work [Kan 2002].

Deming (along with Juran) is also known for “continuous improvement” as a cycle; e.g., “PDCA” (Plan-Do-Check-Act) or “Define-Measure-Analyze-Improve-Control”. Such cycles are akin to the scientific method, as they essentially engage in the ongoing development and testing of hypotheses, and the implementation of validated learning. We touch on similar cycles in our discussions of Lean Startup, OODA, and Toyota Kata.

Problems in Process Improvement

There tended to be no big picture waiting to be revealed … there was only process kaizen … focused on isolated individual steps … We coined the term “kamikaze kaizen” … to describe the likely result: lots of commotion, many isolated victories … [and] loss of the war when no sustainable benefits reached the customer or the bottom line. [Womack & Jones 2003]

Lean Thinking

There are many ways that process improvement can go wrong:

- Not basing process improvement in an empirical understanding of the situation
- Process improvement activities that do not involve those affected
- Not treating process activities as demand in and of themselves
- Uncoordinated improvement activities, far from the bottom line

The solutions are to be found largely within Lean theory:

- Understand the facts of the process; do not pretend to understand based on remote reports; “go and see”, in other words
- Respect people, and understand that the best understanding of the situation is held by those closest to it
- Make time and resources available for improvement activities; for example, assign them a problem ticket and ensure there are resources specifically tasked with working it, who are given relief from other duties
- Periodically review improvement activities as part of the overall portfolio; you are placing “bets” on them just as with new features, so ask whether they merit your investment

Lean Product Development and Cost of Delay

The purpose of controlling the process must be to influence economic outcomes. There is no other reason to be interested in process control. [Reinertsen 1997]

Managing the Design Factory

Discussions of efficiency usually focus on productivity that is predicated on a certain set of inputs. Time can be one of those inputs. Everything else being equal, a company that can produce the pizza cutters more quickly is also viewed as more efficient. Customers may pay a premium for early delivery, and may penalize late delivery; such charges typically would be some percentage (say plus or minus 20%) of the final price of the finished goods.

However, the question of time becomes a game-changer in the “process” of new product development. As we have discussed previously, starting with a series of influential articles in the early 1980s, Don Reinertsen developed the idea of cost of delay for product development [Reinertsen 1997].

Where the cost of a delayed product shipment might be some percentage, the cost of delay for a delayed product could be much more substantial. For example, if a new product launch misses a key trade show where competitors will be presenting similar innovations, the cost to the company might be millions of dollars of lost revenue or more, many times the product development investment.

This is not a question of “efficiency”; of comparing inputs to outputs and looking for a few percentage points improvement. It is more a matter of effectiveness; of the company’s ability to execute on complex knowledge work.
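The difference in scale between a late-delivery penalty and a cost of delay can be sketched with some arithmetic. Every figure below is hypothetical, and the linear model (a fixed amount of revenue lost per week of delay) is an assumption made for simplicity; real cost-of-delay curves may be stepped or nonlinear, as in the trade show case.

```python
def late_delivery_penalty(order_value: float, penalty_percent: float = 20) -> float:
    """Penalty charged as a percentage of the finished goods' price."""
    return order_value * penalty_percent / 100


def cost_of_delay(lost_revenue_per_week: float, weeks_late: int) -> float:
    """Revenue forgone while the product is not on the market (linear assumption)."""
    return lost_revenue_per_week * weeks_late


# Hypothetical figures, chosen only to illustrate the difference in scale.
order_value = 100_000             # price of a production order
development_investment = 500_000  # cost of developing the new product
lost_revenue_per_week = 250_000   # revenue lost for each week the launch slips
weeks_late = 12

penalty = late_delivery_penalty(order_value)
delay_cost = cost_of_delay(lost_revenue_per_week, weeks_late)
multiple = delay_cost / development_investment

print(f"Late-delivery penalty: ${penalty:,.0f}")
print(f"Cost of delay: ${delay_cost:,.0f} ({multiple:.0f}x the development investment)")
```

With these numbers the penalty is a fraction of one order, while the cost of delay is a multiple of the entire development budget.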

Scrum and Empirical Process Control

Ken Schwaber, inventor of the Scrum methodology (along with Jeff Sutherland), like many other software engineers in the 1990s, experienced discomfort with the Deming-inspired process control approach promoted by major software contractors at the time. Mainstream software development processes sought to make software development predictable and repeatable in the sense of a defined process.

As Schwaber discusses [Schwaber & Beedle 2002], defined processes are completely understood, which is not the case with creative processes. Highly automated industrial processes run predictably, with consistent results. By contrast, complex processes that are not understood require an empirical model.

Empirical process control, in the Scrum sense, relies on frequent inspection and adaptation. After exposure to DuPont process theory experts who clarified the difference between defined and empirical process control, Schwaber went on to develop the influential Scrum methodology. As he notes: “During my visit to DuPont … I realized why [software development] was in such trouble and had such a poor reputation. We were wasting our time trying to control our work by thinking we had an assembly line when the only proper control was frequent and first-hand inspection, followed by immediate adjustments” [Schwaber & Beedle 2002].

In general, the idea of statistical process control for digital product development is thoroughly discredited. However, this document covers not only digital product development (as a form of R&D). It covers all of traditional IT management, in its new guise of the digitally transformed organization. Development is only part of digital management.

Evidence of Notability

Continuous improvement is a widely recognized topic in management theory. Notable influences include Shewhart, Deming, and Juran. Lean thinking is often noted for its relevance to continuous improvement. Agile and DevOps are explicitly influenced by ideas from continuous improvement; see, for example, Kim et al. 2016.

Limitations

Like systems thinking, discussions of continuous improvement can appear theoretical, so they should be tailored to the audience.

Related Topics